By Kaya Akyüz

In 2008, a group of political scientists published a study in the journal Science titled “Political Attitudes Vary with Physiological Traits” [paywall]. The article suggested that conservatives and liberals differ in how they blink and sweat in response to images shown to them. As happens with many articles published in Science and Nature, the research received considerable media attention, and it still does. At a time when liberal and conservative voters seem more polarized than ever, interest in possible biological differences between the two groups is unsurprising. But is an article in the prestigious journal Science enough to establish such physiological differences between two political groups? It seems not.

Recently, other scientists tried to reproduce the 2008 study and were unable to confirm its findings. The interesting part is not only that their results do not corroborate the initial study, but also that they failed to get these results published in Science, the journal that had published the original research. The researchers describe the response they received from Science: “About a week later [after the submission], we received a summary rejection with the explanation that the Science advisory board of academics and editorial team felt that since the publication of this article the field has moved on and that, while they concluded that we had offered a conclusive replication of the original study, it would be better suited for a less visible subfield journal.” Despite the authors’ protest, the journal refused to send the submission out for peer review and took no further action regarding either the original paper or the replication effort.

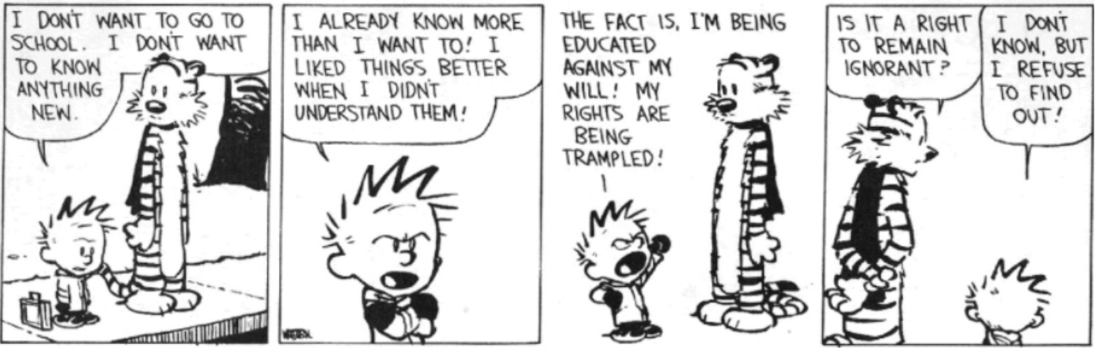

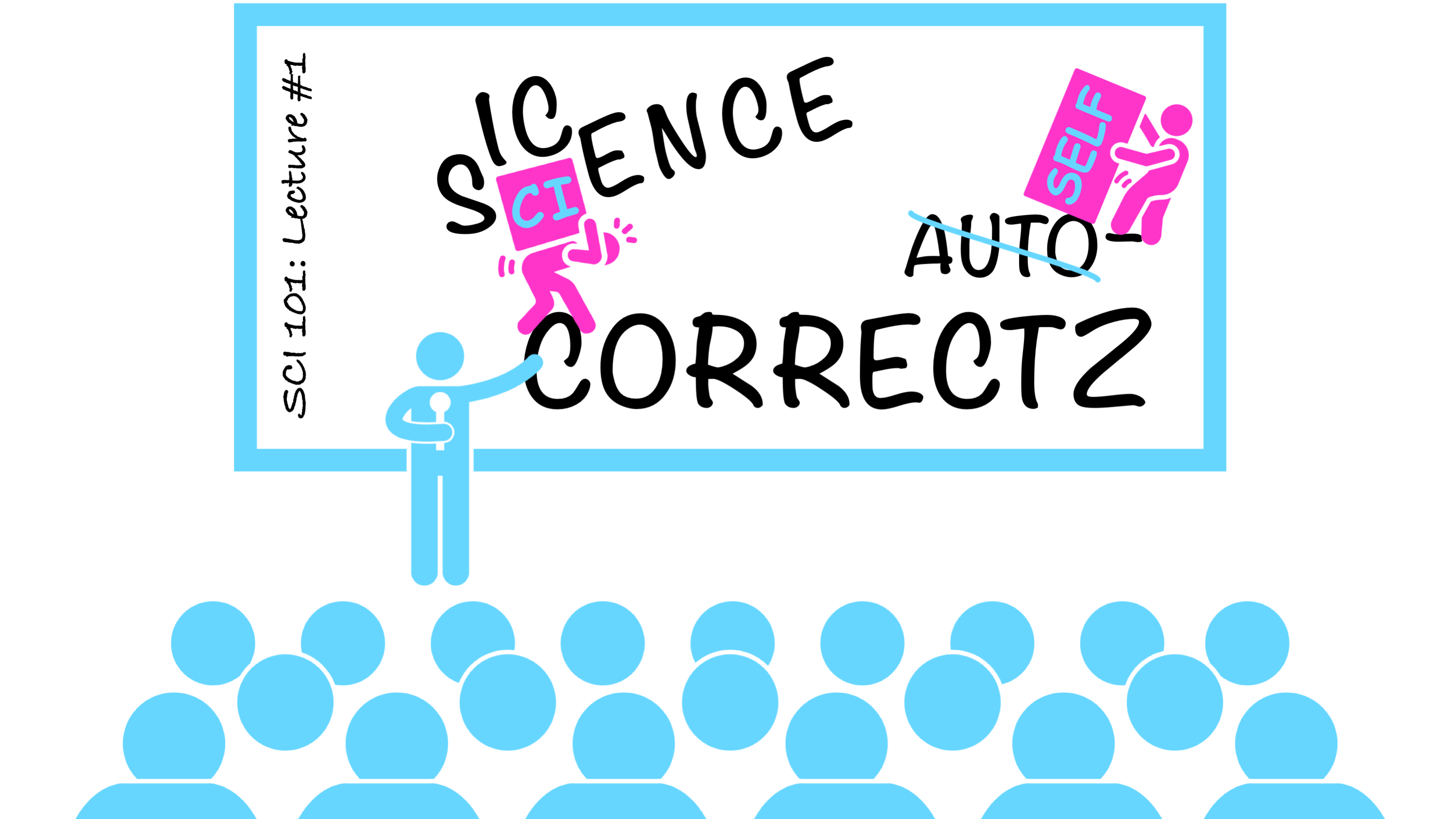

“Science is self-correcting” is a foundational assumption that contributes to the image of science and the authority of scientific knowledge. How does science self-correct? The sociologist of science Robert Merton would have responded: by tackling similar questions as a community of disinterested individuals driven by organized skepticism. However, there are a few problems here. Academic journals do not value reproductions and replications of research as much as novel articles. Trying to validate research findings therefore often becomes a risky effort, since, as this case also suggests, journal editors rarely deem the resulting publications original enough. Who would waste time on something they cannot publish? After all, scientists, among other things, want to advance their careers through publications, as the infamous motto “publish or perish” suggests.

The failure of self-correction mechanisms has at least two consequences, especially in the case of high-profile journals such as Nature and Science. First, through media attention, a study continues to reach large publics, who might assume it to be a verified claim agreed upon by the scientific community, even when the opposite may be true. Second, other scientists treat not-yet-reproduced or irreproducible studies as “facts” and build further research on them, which may turn entire fields into vicious circles. Once a knowledge claim is published, especially in a prestigious journal, it is difficult to prevent its spread even when the claim no longer holds (or the article is retracted). For instance, research has shown that more than thirty thousand articles in biomedicine were based on cell lines contaminated with HeLa cells, rendering their findings largely invalid. Although the contamination has long been known, these thirty thousand articles are still cited in new research, and their claims are repeated in half a million articles.

The fact that Science and Nature reach large and varied publics makes them important for scientists and other members of society. These journals have published numerous studies that became, or contributed to, seminal articles, methods, textbook content, or new technologies. Past failures to recognize groundbreaking research, such as the citric acid cycle that earned Hans Krebs a Nobel Prize, and the push to be at the frontier might be driving these journals’ publication decisions. However, doesn’t their importance make these journals, or any other journal, responsible for giving at least the same opportunity to scientists who claim that published findings do not hold up to scrutiny? Aren’t academic journals responsible for correcting the knowledge claims they propagate? At a time when we increasingly think and talk about responsibility in science, shouldn’t we think of ways to ensure that the most prominent journals abide by the tacit rules of science?

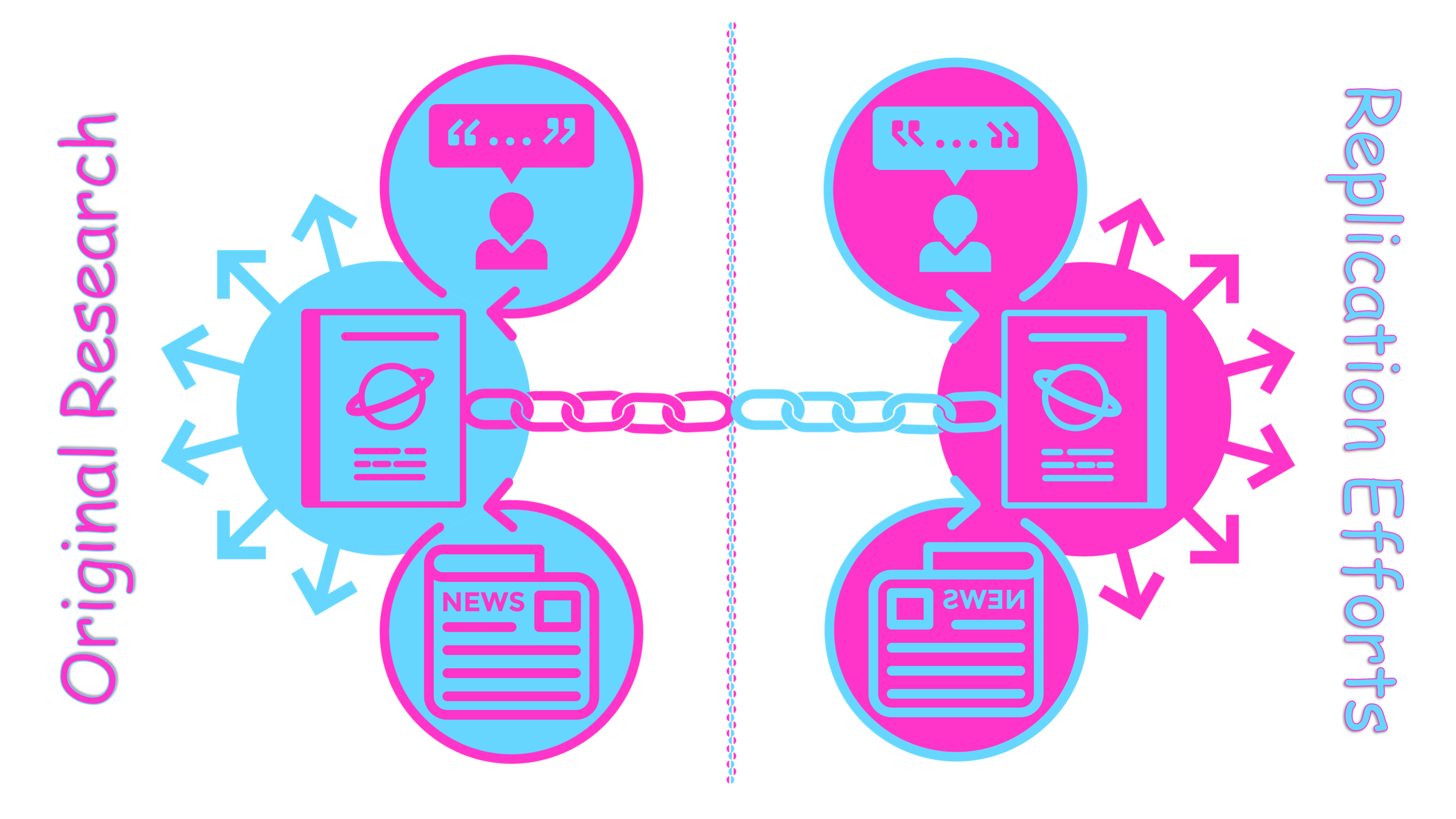

If the reproducibility crisis[1] has revealed one thing, it is the need for change. Continuous growth in science necessitates rethinking traditional peer review and publication practices. In the past, the print journal format did not allow direct links from an original article to its replications. In other words, finding the original article would not lead a reader to its replications, but finding a replication would lead to the original article. Online systems like Crossref, however, could offer a solution by linking the original research to all replication efforts, whether failed or successful, which may in the long run redistribute rewards, such as citations, that often go only to the original article. This means that even when a journal decides not to publish a replication of research it has previously published, it should at least be responsible for linking the original article to the replication effort. Such a transformation in academic publishing may disturb the power imbalances etched into the system and create new incentives for doing replications.

This blog post is not merely about one replication effort and Science’s refusal to publish it. It is about a systemic issue that needs further discussion. As the story I started with continued to unfold on Twitter, it also revealed that other scientists have tried, to no avail, to replicate similar seminal articles. Scientists should not be learning about unconfirmed findings through PubPeer or social media, and they certainly should not be blocked by editors for raising issues publicly. Increasing “impact factors” by publishing “hot” research while leaving it to journals with limited reach to propagate the results of replication efforts may be the status quo, but it is not responsible practice. If academic journals and their editorial boards are to keep their gatekeeping role, they should not be allowed to expand their power through an asymmetrical publication practice that does science no good, especially at a time when alternatives are technically feasible and available.

The author thanks Maximilian Fochler for his valuable feedback as the reviewer of this blog post.

[1] The reproducibility/replicability crisis is an umbrella term for recent observations that, in many fields, previous research findings often cannot be replicated. One interesting case comes from psychology, where researchers had identified numerous genetic variants reportedly associated with depression. Recent research [paywall] has shown that, with larger sample sizes, hundreds of these associations do not hold, turning an entire field into a house of cards. There are various meta-level replication studies, often with similar results, such as this major Science paper [paywall] on top articles in three psychology journals, discussed in detail here. The reproducibility crisis is possibly broader than has yet been observed.

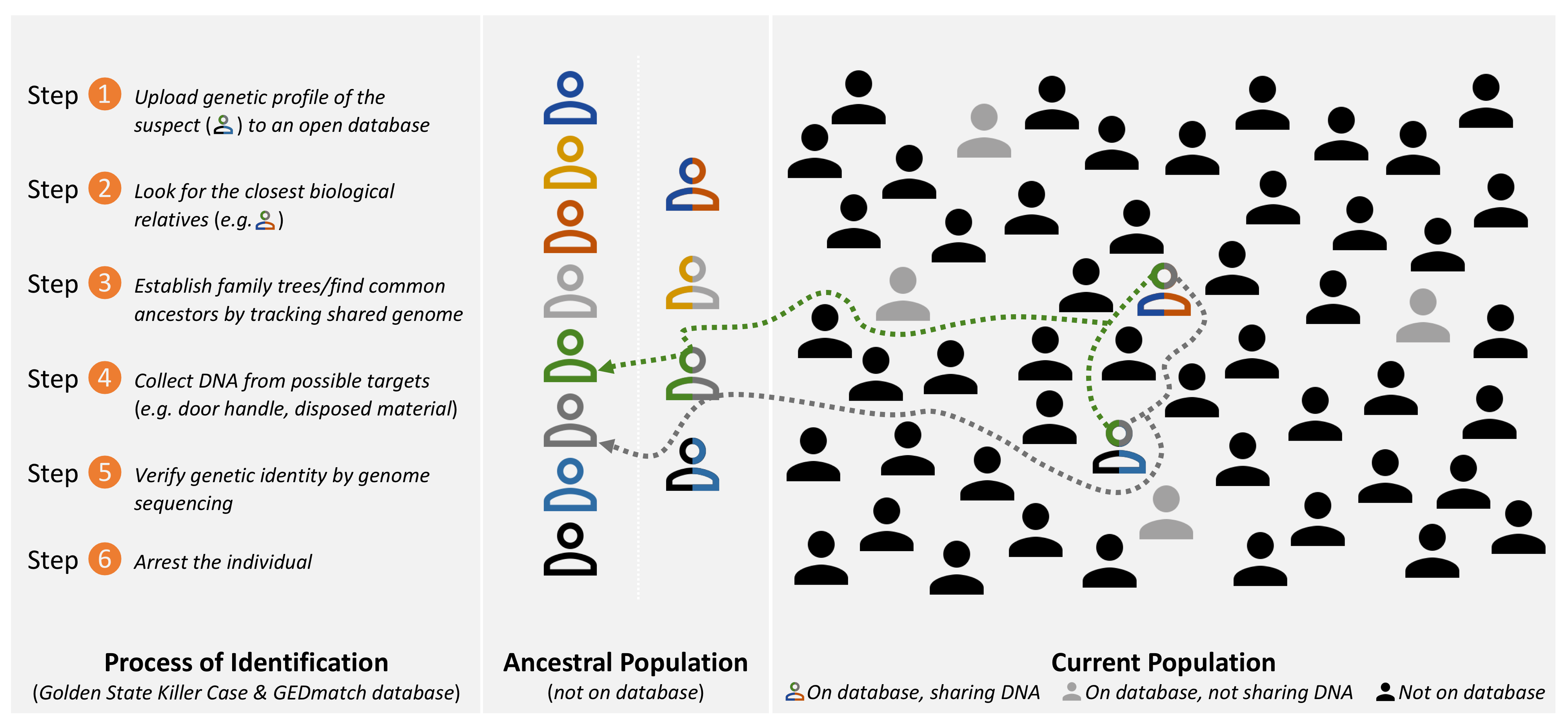

Kaya Akyüz (@KayaAkyuez on Twitter) is a PhD student and uni:docs fellow at the Department of Science and Technology Studies, University of Vienna. He completed his bachelor’s and master’s studies in Molecular Biology and Genetics at Boğaziçi University. His current research examines the dynamics of making and unmaking a new field in science through the case of genopolitics, an emerging research field at the intersection of political science and genetics.